The Craftsman’s Approach to Building With AI

The first of a two-part TruthByte retrospective

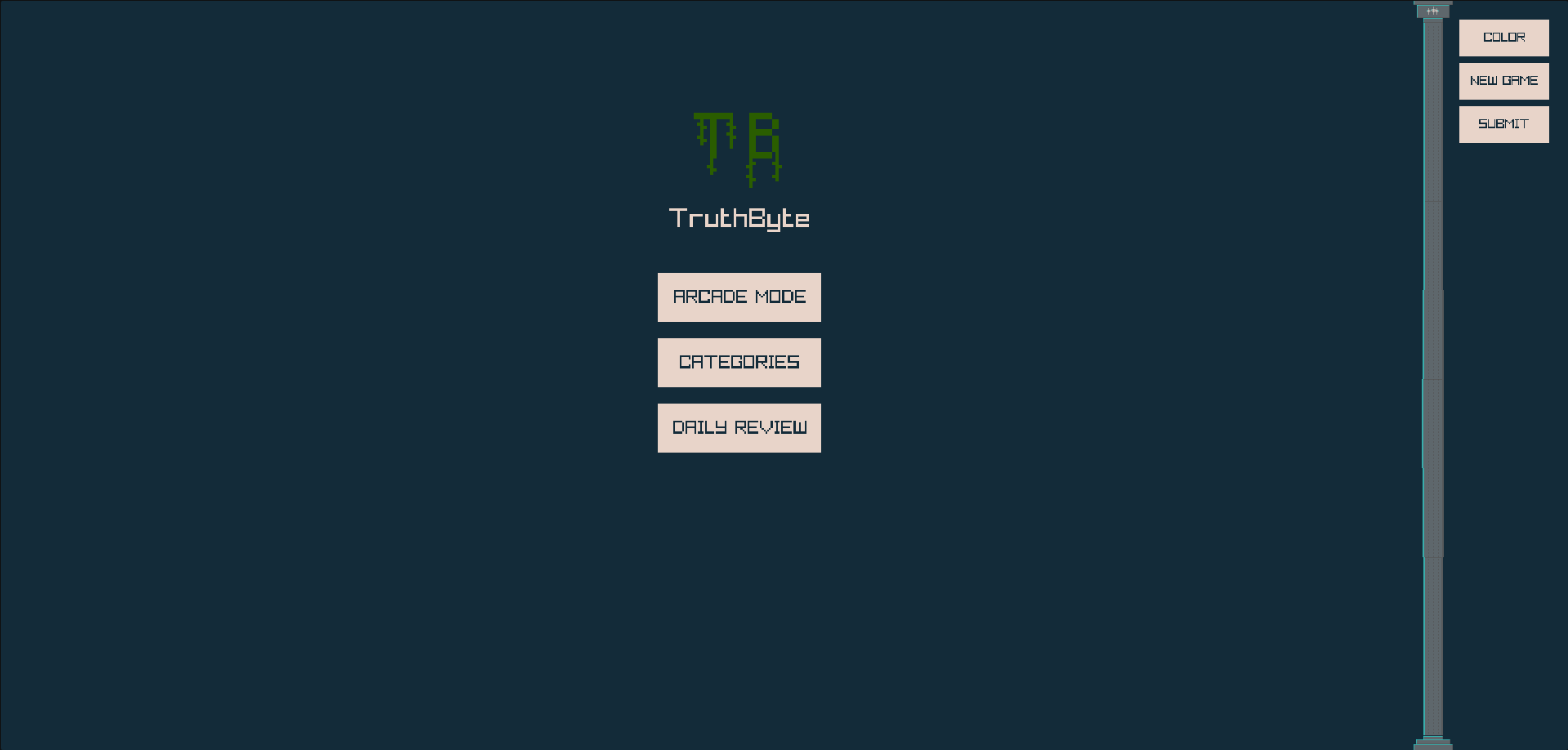

Last month, I built TruthByte—a Zig + WASM trivia engine with a serverless trust-scoring backend—for the AWS Lambda Hackathon. It’s a tiny game with a big goal: crowdsourcing true-or-false questions to improve AI evaluation datasets.

What good is training a model on your domain if you don’t test it?

But this post isn’t about the game.

It’s about how I built it—using AI to learn, set up, build, deploy, implement, and debug.

And more importantly, how I measured that experience after the fact.

⚠️ Small Disclaimer

I haven’t tried fully agentic development.

I don’t have a Swiss Army knife of MCPs.

You won’t catch me prompting some Unity-literate swarm agent to build a game for me in the background.

That stuff is cool—and worth exploring—but this post is grounded in something more tactile.

🛠️ The Craftsman’s Approach

I don’t treat AI as autopilot. I treat it like a lathe or a bandsaw: powerful, precise, and incredibly dumb unless guided well.

I’ve had a ChatGPT Pro subscription for a while, and I dabbled with Cursor’s free trial a few months back. This project was my first time going all in—a full AI-first build.

Here’s how the tools broke down:

- ChatGPT → longform ideation, architecture planning, Mermaid chart generation, prompt generation, and contextual guidance

- Cursor + Claude (Sonnet) → in-editor pairing, implementation, bug fixing, rapid iteration

I view this as the craftsman’s approach to AI:

You show up with a vision.

You stay in the loop.

You use AI to amplify your effort—not to avoid effort entirely.

You learn in one tool and build in the other.

It’s like playing a video game: your hands and your head are engaged the whole time. Don't let autocomplete lull you into playing like a scrub, and don't skip reading the generated code.

I already had the fundamentals: experience with AWS Lambda, deployment tooling, and a newfound love of writing Zig.

I knew what I was building. I knew why.

So I walked the AI toward the right solutions, correcting course when needed.

AI made me faster, not lazier.

And that brings me to the mantra that guided this whole thing:

Slow is smooth, and smooth is fast.

When I moved with wisdom and intention, the work came naturally. When I got careless or lazy, development slowed to a crawl as the agent sprinkled emoji-filled debug statements all over the place to gather more information. After a while, I took that as a sign: either I didn’t know my codebase as well as I thought, or the AI didn’t understand my intent. Even with powerful tools, handwriting good code still matters, because you’re the one setting the example. You're steering.

A moment I truly let go of the wheel? The data parsing scripts in ./scripts/.

That Python came together during a casual conversation in Cursor. It’s a little wild and not perfect, but it unblocked me with almost zero friction.

And that’s the point.

Sometimes, speed is about knowing where to be deliberate—and where to let it ride.

🔍 Overall Project Takeaways

The process followed a pattern I’ve come to trust:

- Plan Ahead

I leaned on GPT for architecture diagrams (Mermaid), deployment flows, and early scaffolding. Setting up the repo, getting the codebase started, and creating folders early gives the AI useful context later.

- Model Choice

In Cursor, claude-3.5-sonnet and claude-4-sonnet became my go-to models for most tasks. I would occasionally switch to ChatGPT in Cursor for documentation. I did not like o3 for anything, but that's just me. Gemini felt like Pepsi to Claude's Coca-Cola. Each model seems good at specific things, so it's worth experimenting to find out what works for you.

- AI-on-AI Prompting

I used my ChatGPT sessions to write prompts for Claude in Cursor. "I copy-pasted between tools like a composer directing a pit orchestra." LOL, AI writing is like the Soul Calibur announcer! Real talk: it works, and I liked it most of the time. I'd create a series of prompts in ChatGPT and paste them into Cursor. The success rate for those tasks was moderately high, but I can't measure vibes.

- Cursor Rules

I discovered Cursor Rules over a week into development. They're worth setting up. I used them to enforce coding style and documentation conventions, and to provide context about my project. At first, I even put the overall blueprint for TruthByte's backend infrastructure in there, since that's what I was building at the time. I don't have measurements here, but I felt the difference.

- Scope

Am I working on the right thing?

I spent a lot of time making sure TruthByte worked on mobile devices. I could have skipped mobile, but it felt important, since that's the most likely way someone will visit the website.

- Recognize Pitfalls and Remediate

Are you getting mad at the process? Why?

I asked myself this at times. The honest answer: I hadn't done enough legwork to let the AI work its magic.

The AI wants to add debugging with a bunch of emojis, huh? That's usually the time to fix it yourself.

- Testing

I didn't write any tests. It's a hackathon! Unfortunately, that made regressions hard to catch. Developing the next mode would sometimes break the previous mode, or the mobile rendering. A more serious codebase, with proper tests, wouldn't have these issues.

💡 Why This Matters

The craftsman’s approach illustrates a simple truth: AI can augment developer output by handing developers a new kind of power tool.

When you know what you’re building and why, AI becomes a force multiplier. You go faster, think deeper, and ship more without burning out.

But here’s the catch: you still have to steer. This workflow only works when you bring the vision, the judgment, and the willingness to say no to AI when it veers off track.

Ultimately, I feel that I thrived in times of hardship because I had access to several AI tools. My strengths led the way, my blind spots shrank, and the space for a more complete vision opened up.

That’s the future I’m betting on: human insight leading the charge—with AI shortening the path to realization.

🎮 Check Out the Final Product

🤝 Let’s Compare Stacks

If you’re building with AI, especially in dev workflows, I’d love to hear what tools, prompts, or patterns you’re using. I haven't tried Anthropic's Claude Code yet, but I suspect I'll like it. I’m working on a follow-up post with something a little different: measuring my own sentiment and the AI's across the many conversations we had in Cursor. It’s been wild, and I think you’ll dig it.

Let's grow!